Introduction

I've used Apache HTTP indexing for easy sharing of local files internally at work; sometimes you just want a link rather than full SMB or NFS access. With a little work in an .htaccess file, these shares can look pretty nice too. I wanted to create something similar for sharing my personal projects with the world, without relying on a third-party service like GitHub or Google Drive. Further, I wanted the backing storage to be S3 rather than local. VPS storage is pricey in comparison.

I've been using Digital Ocean Spaces for S3 since the beta back in 2017 and have been generally pleased with the performance and ease of use. Having my resources off-server has been very convenient too; rather than rsync'ing to a server, I can use all manner of S3 tools to move my data around from anywhere.

However, S3 makes indexing much more complicated than Apache and local files.

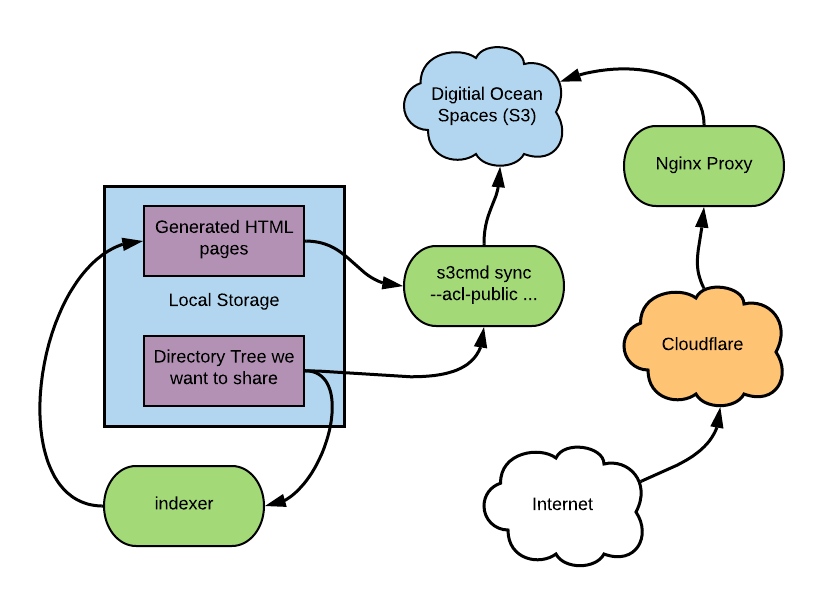

The solution for me is a Haskell program, indexer, that pre-generates index pages for my data and uploads them alongside the content in S3. A few hard-won Nginx proxy_pass and rewrite rules stitch everything together.

You can see the end result at public.anardil.net. Everything you see is static content hosted on S3, including the index pages themselves. The index pages and thumbnails are generated by indexer, the main topic of this post!

Goal

Given a local file system directory, mirror the content to S3 and provide index.html files that give a web-friendly view of that data. Clicking on files will redirect you to the file content hosted on S3. Clicking on directories will open the index.html file for that directory. Lastly, produce an Nginx configuration that will make this behave like a normal website.

Indexer

To generate the index pages, we need to recursively walk the directory to be shared, collecting name and stat information for each file. At the end, we should be able to write out HTML pages that describe and link to everything in the source directory as it will appear in S3.

Here are some more formal requirements:

HTML

- Contain a listing of each file and child directory in a directory. This listing will be sorted alphabetically. Folders are always first.

- Contain a link to the parent directory, if one exists. This must be the first element in the listing.

- Contain a generic header and footer.

- HTML output directories and files must be structured to mirror the source directory, but may not be written into the source.

- Subsequent runs must only write to HTML files for source directories that have changed.

Scanning

- Collect all information in a single file system pass.

- Sub directories should be scanned in parallel for faster execution.

- Files and directories must be ignorable.

Icons

- Listing elements will have icons based on file type, determined by file extension, file name, or stat(2) information. Executable files will also have a specific icon.

- Icons for pictures will be custom thumbnails for those pictures.

- Icons for videos will be custom GIF thumbnails for those videos.

- Any custom thumbnails should only be generated once, and be as small as possible.

Metadata

- Listing elements will have a human readable size.

- Listing elements will have a human readable age description instead of a timestamp.

Yikes, that's a lot of stuff! Let's walk through each piece's implementation in Haskell and work out some details.

Walking the File System

Walking the file system isn't too hard, but I do need to define what information to collect along the way and how the tree itself will be represented. The following is what I went with: the tree is described in terms of DirectoryElements, with the only possible oddity being that everyone knows their parent too. Further, though icons are required for all elements, during scanning I allow Nothing to be returned to indicate that post-processing is required for thumbnails. This separates scanning issues from thumbnail generation issues, and allows thumbnails to be generated in batches rather than piecemeal as they're discovered. Generally, I want to collect everything I'll need from the file system in one go so we don't have to sprinkle IO elsewhere in our program other than writing out the final result.

    data FileElement = FileElement
        { _fname :: String
        , _fpath :: FilePath     -- ^ full path to the file
        , _fsize :: Integer      -- ^ size in bytes
        , _ftime :: Integer      -- ^ how many seconds old is this file?
        , _ficon :: Maybe String -- ^ URI for appropriate icon
        , _fexec :: Bool         -- ^ is this file executable?
        }
        deriving (Show, Eq)

    data DirElement = DirElement
        { _dname    :: String
        , _dpath    :: FilePath
        , _children :: [DirectoryElement]
        }
        deriving (Show, Eq)

    data DirectoryElement =
          File FileElement
        | Directory DirElement
        | ParentDir DirElement
        deriving (Show, Eq)

Defining Ord on DirectoryElement lets us satisfy the ordering requirements easily too. With the following in place, I can use plain old sort to provide the exact ordering we're looking for on any grouping of files and directories.

    instance Ord DirectoryElement where
        -- compare files and directories by name
        compare (File a) (File b) = compare (_fname a) (_fname b)
        compare (Directory a) (Directory b) = compare (_dname a) (_dname b)

        -- directories are always before files
        compare (File _) (Directory _) = GT
        compare (Directory _) (File _) = LT

        -- parent directories are before everything; two parents compare by
        -- name so that compare never reports GT in both directions
        compare (ParentDir a) (ParentDir b) = compare (_dname a) (_dname b)
        compare _ (ParentDir _) = GT
        compare (ParentDir _) _ = LT
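
As a quick check of that ordering, here is a minimal, self-contained sketch. It uses a trimmed-down stand-in for the types above (a hypothetical Entry type whose constructors carry only a name), not the full records, so treat it as an illustration of the idea rather than the indexer's actual code.

```haskell
import Data.List (sort)

-- Simplified stand-in for DirectoryElement: each entry carries only a name.
data Entry
  = File String
  | Directory String
  | ParentDir String
  deriving (Show, Eq)

instance Ord Entry where
  -- parent directories sort before everything else
  compare (ParentDir a) (ParentDir b) = compare a b
  compare (ParentDir _) _ = LT
  compare _ (ParentDir _) = GT
  -- directories sort before files
  compare (Directory a) (Directory b) = compare a b
  compare (Directory _) (File _) = LT
  compare (File _) (Directory _) = GT
  -- files sort by name
  compare (File a) (File b) = compare a b

-- A listing in arbitrary order, as a scan might return it.
listing :: [Entry]
listing =
  [ File "notes.txt"
  , Directory "media"
  , ParentDir ".."
  , File "LICENSE"
  , Directory "code"
  ]

-- The same entries in display order: parent, then directories, then files.
sorted :: [Entry]
sorted = sort listing
```

Note that comparing names with plain String comparison is case sensitive, so LICENSE lands before notes.txt here because uppercase letters sort first.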

File and directory ignoring comes in during the tree walk phase, so no unnecessary work is done walking or stat-ing these elements; a filter after listDirectory is sufficient. As for parallelism, Control.Concurrent.Async.mapConcurrently is the workhorse, conveniently matching the type of mapM. Swapping between these was nice for debugging during development. One note: don't attempt to use System.Process.callProcess under mapConcurrently! You'll block the Haskell IO fibers and jam up everything, potentially causing a deadlock. That's another reason to process thumbnail creation outside of scanning.
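
To make the shape of that walk concrete, here is a rough sketch under a few assumptions: the Tree type, the ignored list, and the Traverse alias are all hypothetical simplifications, and the traversal function is passed in as a parameter so that mapM can be swapped for mapConcurrently (from the async package, not imported here) without touching the walk itself.

```haskell
import System.Directory (doesDirectoryExist, getFileSize, listDirectory)
import System.FilePath ((</>))

-- Hypothetical, simplified tree; the real scan collects more per file.
data Tree
  = Leaf FilePath Integer -- file path and size in bytes
  | Node FilePath [Tree]  -- directory path and its children
  deriving (Show, Eq)

-- How to visit children: mapM for sequential runs, or
-- Control.Concurrent.Async.mapConcurrently for parallel ones.
type Traverse = (FilePath -> IO Tree) -> [FilePath] -> IO [Tree]

-- Names to skip entirely; an assumed example list.
ignored :: [FilePath]
ignored = [".git", ".indexes"]

-- Walk a path in a single pass. Ignored names are filtered out right after
-- listDirectory, so they are never visited or stat-ed.
walk :: Traverse -> FilePath -> IO Tree
walk each path = do
  isDir <- doesDirectoryExist path
  if isDir
    then do
      names <- filter (`notElem` ignored) <$> listDirectory path
      Node path <$> each (walk each) (map (path </>) names)
    else Leaf path <$> getFileSize path
```

Calling walk mapM "share" gives the sequential version that is handy for debugging; passing mapConcurrently instead scans sibling entries in parallel.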

Age, Size, and other Extras

With basic file information available, meeting the age and size requirements is mostly a matter of presentation.

For age, some Ord and Show instances do the heavy lifting. For getting that information from arbitrary DirectoryElements, a new class Ageable works great. Tree recursion is our friend here, since the age of a directory is the minimum age of its children. Age would be easiest represented as just an Integer, but the top level parent directory does not have an age. Further, any particular parent directory's age is unknown by its children due to the single pass scan we're making. This could be filled in through post-processing, but it's not interesting information to present anyway, so I don't bother.

    data Age = Age Integer | NoAge
        deriving (Eq)

    instance Ord Age where

    instance Show Age where

    class Ageable a where
        age :: a -> Age

    instance Ageable FileElement where

    instance Ageable DirElement where

    instance Ageable DirectoryElement where

    instance (Ageable a) => Ageable [a] where
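
The instance bodies are left out above. One way they could be filled in is sketched below; this is my guess at a reasonable shape, not the indexer's actual code. The Element type is a hypothetical stand-in for the real records, and the wording of the age strings (and the "-" placeholder for NoAge) is an assumption.

```haskell
-- Age in seconds, or NoAge when it is unknown (parent directories).
data Age = Age Integer | NoAge
  deriving (Eq)

-- NoAge sorts after every real age, so minimum skips it when possible.
instance Ord Age where
  compare (Age a) (Age b) = compare a b
  compare NoAge NoAge = EQ
  compare NoAge _ = GT
  compare _ NoAge = LT

-- Human readable description, rounded down to the largest whole unit.
instance Show Age where
  show NoAge = "-"
  show (Age s)
    | s < minute = plural s "second"
    | s < hour = plural (s `div` minute) "minute"
    | s < day = plural (s `div` hour) "hour"
    | otherwise = plural (s `div` day) "day"
    where
      minute = 60
      hour = 60 * minute
      day = 24 * hour
      plural 1 unit = "1 " <> unit
      plural n unit = show n <> " " <> unit <> "s"

class Ageable a where
  age :: a -> Age

-- Hypothetical stand-in: a file knows its age, a directory its children.
data Element = File Integer | Directory [Element] | ParentDir

instance Ageable Element where
  age (File s) = Age s
  age (Directory cs) = age cs
  age ParentDir = NoAge

-- A group is as old as its youngest member; an empty group has no age.
instance Ageable a => Ageable [a] where
  age [] = NoAge
  age xs = minimum (map age xs)
```

With these definitions, a directory holding a two hour old file and a ninety second old file reports the younger of the two, and its parent link never influences the result.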

Likewise, size follows a similar pattern, defining Num and Show instances and a Sizeable class to provide a polymorphic size function. The size of a directory is the sum of its children's sizes. Computing these sums (and the minimum age for Age) does incur multiple walks of the tree, but this is the in-memory representation, not the file system, so it's a bit of extra work I'm willing to pay for implementation simplicity. Yet again, parent directories get in the way of a simple representation and require a sum type with a NoSize case so we can correctly distinguish between zero size and no size.

    data Size = Size Integer | NoSize

    instance Num Size where

    instance Show Size where

    class Sizeable a where
        size :: a -> Size

    instance Sizeable FileElement where

    instance Sizeable DirElement where

    instance (Sizeable a) => Sizeable [a] where

    instance Sizeable DirectoryElement where
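
Here too the bodies are elided, so the following is only a sketch of how they might look. The assumptions are mine: NoSize acts as the identity for addition (so sum simply skips parent links), sizes are shown in 1024-based units rounded down, and Element is a hypothetical stand-in for the real records.

```haskell
-- Size in bytes, or NoSize when the notion does not apply.
data Size = Size Integer | NoSize
  deriving (Eq)

-- Apply a function to the byte count, leaving NoSize alone.
onSize :: (Integer -> Integer) -> Size -> Size
onSize f (Size n) = Size (f n)
onSize _ NoSize = NoSize

-- NoSize is ignored by addition, which is all that sum needs.
instance Num Size where
  NoSize + b = b
  a + NoSize = a
  Size a + Size b = Size (a + b)
  NoSize * _ = NoSize
  _ * NoSize = NoSize
  Size a * Size b = Size (a * b)
  abs = onSize abs
  signum = onSize signum
  negate = onSize negate
  fromInteger = Size

-- Human readable size, rounded down to the largest whole unit.
instance Show Size where
  show NoSize = "-"
  show (Size b)
    | b < k = show b <> " B"
    | b < k * k = show (b `div` k) <> " KB"
    | b < k * k * k = show (b `div` (k * k)) <> " MB"
    | otherwise = show (b `div` (k * k * k)) <> " GB"
    where
      k = 1024

class Sizeable a where
  size :: a -> Size

-- Hypothetical stand-in: a file knows its size, a directory its children.
data Element = File Integer | Directory [Element] | ParentDir

instance Sizeable Element where
  size (File n) = Size n
  size (Directory cs) = size cs
  size ParentDir = NoSize

-- A group's size is the sum of its members' sizes.
instance Sizeable a => Sizeable [a] where
  size = sum . map size
```

The distinction from the text survives: an empty directory sums to Size 0 and displays as "0 B", while a parent link stays NoSize and displays as "-".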

Icons and Thumbnails

There are a couple of ways for a file to be assigned an icon. The easiest is by file extension, like .c, .py, and the like. Second, by name, such as LICENSE or other well-known files that don't have extensions. Third, the directory scanning phase notes whether a file is executable, so we can assign an icon based on that information. The last possibility, other than assigning a default icon, is to mark the file as needing a custom icon. This is the case for images and videos, which get thumbnails and GIFs respectively. Post-processing will collect the FileElements that need icons generated and delegate to a Python program later, back in main. The output file name is predetermined by a hash of the input file path. Using Data.Hashable (hash) :: Hashable a => a -> Int lets us keep IO out of this inline icon processing entirely. But wait! Isn't that a .py you spy? Unfortunately yes; image manipulation in pure Haskell was going to pull in literally dozens more external libraries through dependencies, and Python's Pillow is just too easy.

    getIcon :: FilePath -> Bool -> Maybe String
    -- ^ nothing indicates that the icon needs to be generated
    getIcon fullpath exec
        | isImage fullpath = Nothing
        | otherwise = Just $ base <> tryExtension
      where
        tryExtension =
            case H.lookup extension extMap of
                (Just icon) -> icon
                Nothing -> tryName

        tryName =
            case H.lookup name nameMap of
                (Just icon) -> icon
                Nothing -> tryExec

        tryExec =
            if exec
                then "exe.png"
                else defaultIcon

        name = map toLower $ takeFileName fullpath
        extension = map toLower $ takeExtension fullpath

        defaultIcon = "default.png"
        base = "/theme/icons/"
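
The point about hashing is that a thumbnail's name can be computed purely from the source path, before the thumbnail exists. The sketch below illustrates that idea with a hand-rolled FNV-1a style hash over the path's characters, used here only as a dependency-free stand-in for Data.Hashable's hash. The thumbnailFor name, the /thumbnails/ prefix, and the .png suffix are assumptions for illustration.

```haskell
import Data.Bits (xor)
import Data.Char (ord)
import Data.List (foldl')
import Data.Word (Word64)
import Numeric (showHex)

-- 64-bit FNV-1a style hash, folded over code points rather than bytes.
-- A stand-in for Data.Hashable's hash, not a replacement for it.
fnv1a :: String -> Word64
fnv1a = foldl' step 14695981039346656037
  where
    step h c = (h `xor` fromIntegral (ord c)) * 1099511628211

-- Thumbnail URI for a source file, derived from its path alone.
-- The prefix and suffix are hypothetical.
thumbnailFor :: FilePath -> String
thumbnailFor path = "/thumbnails/" <> showHex (fnv1a path) ".png"
```

Because thumbnailFor is pure, the scan can record the icon URI immediately, and a later batch step can check whether that file already exists before generating anything.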

HTML generation

With all the other information provided by previous layers, creating the HTML pages is a mostly trivial formatting problem. The tree structure of DirElement allows easy recursion through directories to build the pages. Each specific type (File, Directory, ParentDir) implements html accordingly to return a string. buildPages is aware of its location in the file system tree by path, and can use that to return WritableHtmlPages that main can handle the IO for. Separating generation from writing is another IO separation win that made development in the REPL and testing easier.

    type WritableHtmlPage = (FilePath, HtmlPage)
    type HtmlPage = String

    buildPages :: FilePath -> DirElement -> [WritableHtmlPage]
    -- ^ build the html for this directory, and all of its children. this is recursive
    buildPages base element =
        parent : children
      where
        parent = (base, htmlPage $ _children element)
        children = concatMap recurse (_children element)

        recurse :: DirectoryElement -> [WritableHtmlPage]
        recurse (Directory c) = buildPages (_dpath c) c
        recurse _ = []

    htmlPage :: [DirectoryElement] -> HtmlPage
    -- ^ creates a single HTML page to index the elements provided, no children
    htmlPage es =
        htmlHeader website <> concatMap html es <> htmlFooter
      where
        website = "anardil.net"

    html :: DirectoryElement -> String
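
The body of html isn't shown, so here is one possible shape for rendering a single listing row. Everything in it is an assumption: the Row record, the table markup, and the choice to pass size and age in as already formatted strings. It also only escapes HTML; percent-encoding the link target is left out.

```haskell
-- Escape the characters that are unsafe in HTML text and attribute values.
escape :: String -> String
escape = concatMap esc
  where
    esc '&' = "&amp;"
    esc '<' = "&lt;"
    esc '>' = "&gt;"
    esc '"' = "&quot;"
    esc c = [c]

-- Hypothetical view of one listing entry, with everything pre-formatted.
data Row = Row
  { rowIcon :: String -- icon URI
  , rowHref :: String -- link target
  , rowName :: String -- display name
  , rowSize :: String -- human readable size
  , rowAge :: String  -- human readable age
  }

-- One table row: icon, link, size, and age. The layout is illustrative.
renderRow :: Row -> String
renderRow r =
  concat
    [ "<tr><td><img src=\"", escape (rowIcon r), "\"></td>"
    , "<td><a href=\"", escape (rowHref r), "\">", escape (rowName r), "</a></td>"
    , "<td>", escape (rowSize r), "</td>"
    , "<td>", escape (rowAge r), "</td></tr>\n"
    ]
```

Keeping the renderer as a pure String function matches the approach above: the pages can be inspected in the REPL and only main ever writes them out.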

With our HTML index pages all prepared, we're ready to upload them to S3! Great, but how do we use them there?

Nginx

Digital Ocean Spaces provides HTTPS access to public objects, but that doesn't get us all the way to a final solution. At the absolute minimum, we need to redirect requests for / to our starting index.html. To further complicate things, it doesn't live under /index.html since we separated the index files from the content. So we need a rewrite rule for this, and it turns out a few other things as well.

We start with a generic Nginx proxy configuration, and specify a local cache. I've enabled the CDN in DO Spaces, but I'd still rather cut the request latency by having Nginx respond directly when possible. This reduces the request path from Spaces <-> Spaces CDN <-> Nginx <-> Cloudflare <-> Client to Nginx <-> Cloudflare <-> Client. By passing the Spaces CDN's cache status back to Cloudflare, this can be reduced to Cloudflare <-> Client in the happy path, which is even better.

    server {
        listen 80;
        listen [::]:80;

        server_name public.anardil.net;

        proxy_cache internal-cache;
        proxy_cache_valid any 5m;
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
        proxy_cache_revalidate on;
        proxy_cache_background_update on;
        proxy_cache_lock on;

        proxy_ignore_headers Set-Cookie;
        add_header X-Cache-Status $upstream_cache_status;
        proxy_hide_header Strict-Transport-Security;

Time for the / rewrite rule. When a client requests public.anardil.net or public.anardil.net/, we'll send them directly over to our root index.html.

        location = / {
            proxy_pass https://anardil-public.sfo2.digitaloceanspaces.com/share/.indexes/index.html;
        }

Directories are next. Our backing storage, S3, doesn't have directories at all, so there isn't anything for a directory URL to point to. And regardless, what we really want is to point to the index.html for that directory so the user can see what's in it. So for instance public.anardil.net/media/ needs to be rewritten to .../.indexes/media/index.html. The trailing / in the rule matches directories of this format. Note also that Digital Ocean Spaces are not tolerant of double slashes; i.e. .../.indexes/media//index.html would return 403 "Forbidden", really meaning "object not found", so the exact replacement is important.

        location ~ ^/(.*)/$ {
            proxy_pass https://anardil-public.sfo2.digitaloceanspaces.com/share/.indexes${request_uri}index.html;
        }

Time for files! The internet is a wild place when it comes to links. Sending a URL to a friend doesn't mean that it's going to be sent to their browser in the exact same format. For instance, iMessage will helpfully convert public.anardil.net/media/ to public.anardil.net/media, which will break our rule above. media is a directory, not a file, but without the trailing slash it'll be tried as a file. To handle this, we can use recursive error pages to reattempt the URL provided with a modification. If a "file" isn't found (S3 returns 403), add a slash and try it again as a directory. The result isn't actually an error page at all if we're successful, but the page the user wanted all along. If the request really is /bogus, the user will get a 403 from the rewritten /bogus/, which is perfectly fine.

        location ~ ^/(.*)$ {
            proxy_intercept_errors on;
            recursive_error_pages on;
            error_page 403 = @finder;

            proxy_pass https://anardil-public.sfo2.digitaloceanspaces.com/share${request_uri};
        }

        location @finder {
            rewrite ^/(.*)$ /share/.indexes/$1/index.html break;
            proxy_pass https://anardil-public.sfo2.digitaloceanspaces.com;
        }
    }
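
To keep the three rules straight, it can help to model them as a pure function from request path to the object keys Nginx will try, in order. The sketch below is only that: a model of the rules above written in Haskell, with a hypothetical candidates function, which ignores query strings and everything about caching.

```haskell
import Data.List (isSuffixOf)

-- Object keys within the Space, in the order they are tried, for a
-- given request path. A model of the location rules, not generated config.
candidates :: String -> [String]
-- the root goes straight to the top level index
candidates "/" = ["/share/.indexes/index.html"]
candidates path
  -- a trailing slash means a directory: serve its index page
  | "/" `isSuffixOf` path = ["/share/.indexes" <> path <> "index.html"]
  -- otherwise try the file first, then fall back to a directory index
  -- (the @finder step that runs when the file lookup returns 403)
  | otherwise =
      [ "/share" <> path
      , "/share/.indexes/" <> drop 1 path <> "/index.html"
      ]
```

For example, a request for /media first tries /share/media as a file and then falls back to /share/.indexes/media/index.html, which is the same key that /media/ resolves to directly.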

Summary

It's been quite a journey, but we now have a static, indexed representation of our local file system directory shared with the world and backed solely by S3! Haskell let us describe the problem in terms of interacting types, and be explicit in limiting IO side effects to specific locations. Our Nginx server shunts all responsibility to S3 or a local cache, making performance a breeze on top of the general advantages of static content. With cron or devbot orchestrating, we can run this periodically and share files with others by dropping them in a local folder; pretty sweet.

Thanks for reading!